Introduction

At VGS, reliability isn't just a "nice-to-have" - it's a fundamental requirement, especially as we serve and partner with enterprise customers who demand SLAs in excess of four nines of availability for critical payment data flows. VGS services have long used a multi-site active-active architecture within a given region using Availability Zones (AZs), with database snapshots copied to our recovery region. In the last few years, we've recognized the need to invest in our infrastructure's resilience to meet the increasing demands of our customers, including building a platform that can recover from region-level failure events. This meant tackling a crucial challenge: implementing a robust Cross-Region Disaster Recovery (CRDR) setup. In this blog post, we detail our journey, the challenges we faced, and the solutions we implemented to achieve enterprise-grade reliability.

The Drivers: Enterprise Readiness and Unwavering Availability

Our enterprise customers expect high availability, business continuity, and compliance with industry regulations, making Disaster Recovery (DR) a non-negotiable requirement. As such, our decision to invest in CRDR was driven by a clear vision: to ensure unwavering availability and data integrity for our enterprise customers. These customers operate mission-critical applications where downtime, no matter how remote the possibility, is simply not an option. We needed to demonstrate our commitment to business continuity and provide the peace of mind that comes with a robust disaster recovery strategy.

Challenges of Cross-Region Disaster Recovery

Standing in our way was the simple fact that we choose to invest our resources only where they have the greatest impact. As a company that's building a sustainable business, we didn't want to build a new team to manage a new environment, nor did we want to pay our cloud provider more than necessary to keep that environment standing unused. This work needed to pay us back beyond the immediate effort by paving a road for future work.

We already maintain six environments that applications are deployed across. Potentially doubling the number of releases we make for each change would cause even the most masochistic of engineers to reconsider their life choices. We knew that we needed to design an architecture that allowed engineers to move as quickly as, or more quickly than, they do today.

With almost a decade of operations under our belt, it's safe to say that a couple of less-than-stellar decisions were made when time pressure crept in. As a result, some cultural changes need to be enforced to ensure the consistency and repeatability of what we build. As a group, it would be a challenge for us to build environments that are fully automated and to resist the temptation of putting fingers on the keyboard to manually provision this, that, or the other.

Finally, in order to bring traffic across multiple regions we needed to rethink our edge traffic and database architecture and find a solution that would seamlessly move traffic and data across regions without struggle.

Designing for Resilience: Partnering with AWS, and leveraging Infrastructure as Code, DevOps, and Release Automation

To guide our implementation, we turned to the AWS Well-Architected Framework, specifically focusing on the Reliability pillar. This framework provided a structured approach to designing and building resilient systems. We also leveraged the principles outlined in "Infrastructure as Code" by Kief Morris to ensure our stacks were modular, maintainable, and repeatable. Finally, we followed common DevOps recommendations as espoused by DORA so that deployments are inexpensive, repeatable, and consistent across environments.

Key Design Principles:

- Regional Redundancy: Design and deploy key services to be provisioned across multiple AWS regions to ensure failover capabilities.

- Warm Standby: We focused on developing our secondary environment to have a warm standby capability (as opposed to a pilot light or backup and restore strategy). This means it's capable of processing a transaction at any point in time, even before a failover has been invoked. This choice was also informed by our business objective of four nines uptime, which allows only about 52 minutes of downtime in a year. While we don't expect to suffer a disastrous situation such as a region-level failure in AWS on a yearly basis, we chose this from the mindset of “hope for the best, prepare for the worst”. As you can see from the infographic below, Warm Standby offers RPO / RTO in minutes, whereas Backup & Restore can take hours.

- Elastic Compute: Use autoscaling and other elastic compute capabilities to rightsize capacity to the current workload.

- DevOps Automation: A judicious use of scripts and automations to make the provisioning, deployment, and (crucially) re-provisioning of components trivial. This theme constantly showed up as we demanded everything we created be inexpensive, consistent and repeatable.
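The downtime figure quoted for four nines can be checked with a little arithmetic. The sketch below is illustrative only: it assumes a 365-day year, and the function name is ours rather than anything from VGS tooling.

```python
# Downtime budget implied by an availability target (illustrative arithmetic only).
MINUTES_PER_YEAR = 365 * 24 * 60  # assumes a non-leap year: 525,600 minutes


def downtime_budget_minutes(availability: float) -> float:
    """Return the minutes of downtime per year permitted by an availability target."""
    if not 0.0 < availability <= 1.0:
        raise ValueError("availability must be in the range (0, 1]")
    return (1.0 - availability) * MINUTES_PER_YEAR


if __name__ == "__main__":
    targets = [("three nines", 0.999), ("four nines", 0.9999), ("five nines", 0.99999)]
    for label, target in targets:
        print(f"{label}: {downtime_budget_minutes(target):.2f} minutes/year")
```

At four nines the budget works out to roughly 52.56 minutes per year, which is why a recovery strategy measured in hours would consume the whole annual allowance in a single incident.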

Overcoming Challenges: Proliferation and Release Management

One of the major hurdles we faced was the potential for a proliferation of deployments. With six existing environments and the addition of a secondary region, we risked creating a complex and unmanageable infrastructure. To address this, we focused on:

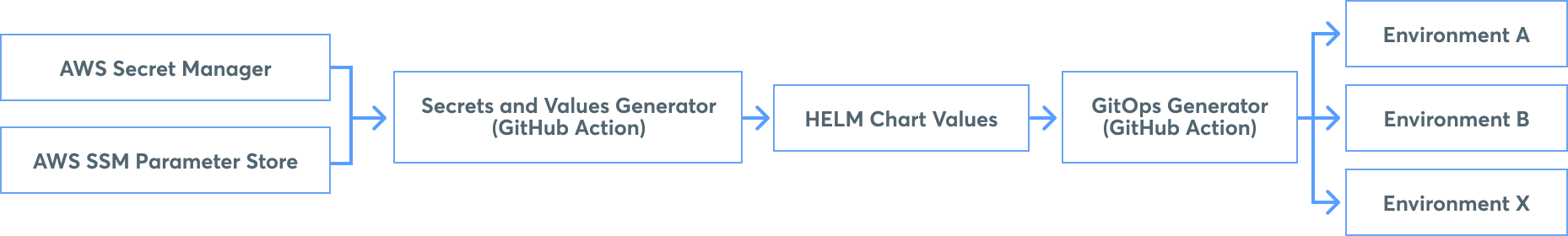

- Improved Release Automation: We developed enhanced release automation tooling that enables consistent and efficient deployments across all environments. This ensures that changes can be rolled out reliably and predictably, minimizing the risk of errors. Every application that's certified to run in our secondary regions has to be provisioned programmatically using scripted methods. That starts with the application's secrets and values, which are pulled from IaC outputs, and continues through to the generation of GitOps code and individual application rollouts.

- Modular Infrastructure as Code (IaC): We designed our IaC to ensure each component was modular. This allowed us to reuse components across environments and reduce the amount of bespoke coding required. One key aspect of this was the introduction of a boilerplate code generator that allowed us to consistently reproduce stacks for our environments without the copy'n'paste approach that had plagued our previous IaC efforts.

A Complementary Improvement: Automating Secrets and Values Provisioning

To streamline our release process, we automated secrets and values provisioning. Non-sensitive IaC outputs, such as IAM roles, are stored in AWS SSM Parameter Store, while sensitive outputs, like database or API credentials, are stored in AWS Secrets Manager. These values are automatically injected into each service release, eliminating manual setup across all environments.

Automating the management of IAM ARNs and other complex or sensitive values across multiple environments significantly reduced engineering toil. This investment in automation dramatically simplified subsequent instance provisioning in our secondary region compared to our legacy IaC.

We used GitHub Actions to create workflows that query AWS and generate identical Helm release values for all environments. This ensures engineers perform the same release process for any change, whether it's an application upgrade or a new value.
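The flow described in this section can be sketched roughly as follows. This is not our actual tooling: the keyword-based sensitivity rule, the function names, the values layout, and the secret path format are all hypothetical, and the sketch only produces data structures rather than calling AWS.

```python
from typing import Dict, Tuple

# Hypothetical rule: an output is treated as sensitive if its key contains one
# of these markers. A real pipeline would more likely use explicit metadata.
SENSITIVE_MARKERS = ("password", "secret", "token", "credential")


def split_outputs(outputs: Dict[str, str]) -> Tuple[Dict[str, str], Dict[str, str]]:
    """Partition IaC outputs into (Parameter Store values, Secrets Manager values)."""
    parameters: Dict[str, str] = {}
    secrets: Dict[str, str] = {}
    for key, value in outputs.items():
        is_sensitive = any(marker in key.lower() for marker in SENSITIVE_MARKERS)
        (secrets if is_sensitive else parameters)[key] = value
    return parameters, secrets


def helm_values(environment: str, region: str,
                parameters: Dict[str, str], secrets: Dict[str, str]) -> dict:
    """Build release values: plain values inline, secrets by reference only."""
    return {
        "environment": environment,
        "region": region,
        "config": dict(sorted(parameters.items())),
        # Hypothetical path convention; secret values never enter the release file.
        "secretRefs": sorted(f"/{environment}/{region}/{name}" for name in secrets),
    }


if __name__ == "__main__":
    # Placeholder outputs for illustration; none of these are real values.
    example_outputs = {
        "app_iam_role_arn": "arn:aws:iam::000000000000:role/example-app",
        "db_password": "not-a-real-password",
    }
    params, secs = split_outputs(example_outputs)
    print(helm_values("example-env", "example-region", params, secs))
```

Because the same function produces the values for every environment and region, the only inputs that vary between releases are the environment name, the region, and the IaC outputs themselves.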

Cost Optimization: Elasticity and Smart Resource Allocation

Cost control was paramount throughout this project. We implemented several strategies to optimize resource utilization and minimize expenses:

- Elastic Compute Capacity: We leveraged the elasticity of AWS services to dynamically scale our compute resources based on demand. This allowed us to run minimal compute capacity in the secondary region and automatically scale up when needed during a failover. This was applied to our Kafka, PostgreSQL, and application pods. AWS recently released an improvement to Aurora Global that allowed us to provision a secondary region database that scales to zero when not being used. This enhancement was crucial in allowing us to cost-effectively implement a Warm Standby architecture as opposed to a Pilot Light implementation where we would have run a headless database instance. Thanks AWS!

- Automated Cost Monitoring: We implemented automated cost monitoring and alerting to track our spending and identify potential areas for optimization. While this work is still nascent, custom labeling and tagging have been crucial to it: they let us see costs by environment, AWS account, and service, and they drive decisions within the business.

Traffic and Data Routing: Re-architecting for Cross-Region Resilience

The final challenge we addressed was routing traffic and data across both the primary and secondary regions.

Routing traffic required us to re-architect how requests come in and to redesign DNS within our application clusters. We leveraged AWS Global Accelerator (AGA) to dynamically route traffic to both regions, with a region-agnostic Point of Presence (POP) accepting traffic, and we moved DNS out of our Kubernetes clusters, which had the added benefit of simplifying our application deployments. With no more DNS required in our applications, we hope to see more resiliency as we minimize the chance of a common source of outages.

For data we are fortunate: our data stores are all Kafka, PostgreSQL, and Redis. Neither our Redis nor our Kafka workloads require replication, so we dodged a bullet on needing to solve for them. Our PostgreSQL workloads, however, must be replicated, and AWS Aurora Global was a great way to get cross-regional replication at a price that was acceptable for the business. Unfortunately for us, we leverage AWS RDS Proxy, and we've found the two products don't play nicely together: we cannot use write forwarding through RDS Proxy. For our secondary environment to accept write transactions, it must bypass RDS Proxy and connect directly to the PostgreSQL instance to take advantage of Aurora Global's write forwarding. We hope there's a product enhancement coming, but until then this is how our secondary region connects.
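The connection rule this implies can be captured in a few lines. The sketch below is a simplified illustration, not our production code: the class, the field names, and the hostnames are hypothetical placeholders.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class RegionDatabase:
    """Database endpoints for one region (all hostnames here are placeholders)."""
    region: str
    is_primary: bool
    proxy_endpoint: str   # endpoint that goes through RDS Proxy
    direct_endpoint: str  # endpoint that talks to PostgreSQL directly


def connection_endpoint(db: RegionDatabase) -> str:
    """Pick the endpoint an application in this region should connect to.

    The primary region keeps using the proxy. The secondary region bypasses
    it, because write forwarding does not work through the proxy.
    """
    return db.proxy_endpoint if db.is_primary else db.direct_endpoint


if __name__ == "__main__":
    primary = RegionDatabase("primary", True, "proxy.primary.example", "db.primary.example")
    secondary = RegionDatabase("secondary", False, "proxy.secondary.example", "db.secondary.example")
    for db in (primary, secondary):
        print(f"{db.region}: connect to {connection_endpoint(db)}")
```

Keeping the decision in one function means that, if the proxy limitation is lifted in the future, only this rule needs to change rather than each application's configuration.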

Leveraging Existing IaC and DevOps Workflows

Fortunately, our infrastructure team had already invested heavily in IaC and DevOps practices. The last two years have been a determined effort to migrate to a single IaC tool, with Terraform ultimately winning the battle and becoming our single source of truth. This, along with improvements in automated tooling, allowed us to:

- Accelerate Development: We were able to leverage existing IaC modules and improved designs and workflows to accelerate the development and deployment of our CRDR solution.

- Improve Consistency: Our new IaC practices ensured consistency across all environments, reducing the risk of configuration drift.

- Automate Provisioning and Management: We leveraged automated tooling to provision and manage our infrastructure, reducing manual effort and the potential for human error.

As much as anything technical, this has been a huge cultural shift for our team as we resist the temptation to click-ops changes when an IaC component is broken. We've made huge strides here and expect to see this continue in the future as we further move towards fully immutable infrastructure to solve our largest source of severity 1 incidents at VGS.

One benefit of improved automation is that our ability to test and iterate has increased. All of these improvements have been through the test gauntlet multiple times, including in production environments that are exposed to customers. At VGS, we believe that the ability to iterate and experiment is key to velocity. In turn, every tool that makes it easier for us to do what we do daily is a time saver that allows us to spend more time developing features for customers.

Our ultimate goal is to be able to tear down and reproduce any IaC stack consistently and repeatedly across all environments at VGS.

Conclusion: A Future-Proof DR Strategy

Our team has made a massive effort and we are now starting to reap the benefits. Over the last two years, our focus on IaC and process has massively improved our platform stability. Now, with the previous year's focus on automation and tooling, we're starting to see a platform that's not only more stable but also has continuously improving unit economics and better developer velocity. It certainly helps that the codebase becomes more enjoyable to work on as we untangle some of the more complex parts of our infrastructure and rebuild them. This CRDR effort was executed by a squad of talented engineers who came together to collaborate and relentlessly execute (and debate and argue if we're being honest 😊) on a goal. It is a true testament to our ability to achieve an ambitious goal when we focus our efforts. Without the people involved in this effort it would have been nothing but an idea, as much of this was designed and solved for as problems arose.

We're now in a great place to take the learnings from our secondary region enablement and start to bring them into the existing environments. In short, we're looking forward to what the future holds.

Building a cross-region disaster recovery setup is a complex undertaking, but the benefits are undeniable. By investing in reliability, automation, and cost optimization, we have successfully improved an enterprise-grade platform that can withstand even the most challenging scenarios that our customers throw at us. We are just shy of 4 billion cards stored in our platform, and are proud to call VGS home to some of the biggest and most demanding customers in the industry, many of which are household names that we engage with regularly in our lives outside work. It is a motivating circle of innovation!

We encourage you to share your experiences with disaster recovery and infrastructure resilience. We're always eager to learn from others and continue improving our own practices.