Recently, while enjoying post-nuptial bliss, my now-wife 'volun-told' me to be in charge of planning our honeymoon in Europe. This was the first time, but not the last, that I became fully convinced that the market hype surrounding AI platforms wasn't simply a passing fad and that I could use it to my advantage.

Trip planning isn't my specialty. I needed a starting point, and I was curious about which options matched my wife's and my preferences. A few ChatGPT prompts later, I had, in seconds, four fully planned, fully personalized, two-week itineraries to compare: Italy, Spain, Portugal, and the South of France. I'm sure I could have added many more. Magic.

As a relatively early adopter of new technologies, I was surprised I hadn't used AI tools outside of work before. Like many of the billions of people now actively using AI, I discovered a use case in one setting (the workplace), but the habit hadn't carried over into my personal life. Now it has arguably integrated too well! In almost every facet of my life, I can't resist taking every question in my head and typing yet another prompt into ChatGPT.

This dramatic shift in my personal life mirrors how payments have, first gradually and now suddenly, become the next hot topic for embedded software within AI platforms and agents.

Going back to my honeymoon planning example, what struck me was that payment options were nowhere to be found in this flow (yet). Even if I wanted to, I couldn't book my flights or hotel seamlessly; instead, I'd be redirected to another website and have to type in my card information at every single step.

That made me wonder: what will it take to complete that 'last mile' of payments? How would the many parties in this fast-growing ecosystem, including AI platforms, AI agents, developers, users (like me), and merchants, all connect in a single transaction?

AI and Agentic Payments

Now, as a self-proclaimed “payments nerd”, I naturally started thinking about how all of these parties need to connect. For agentic commerce to truly scale, the payment experience has to be seamless for the consumer on the frontend and scalable for any merchant or platform on the backend. A truly universal commerce platform would need to let a consumer buy any product, from any merchant, using any payment method, which is much easier said than done. A few immediate considerations come to mind:

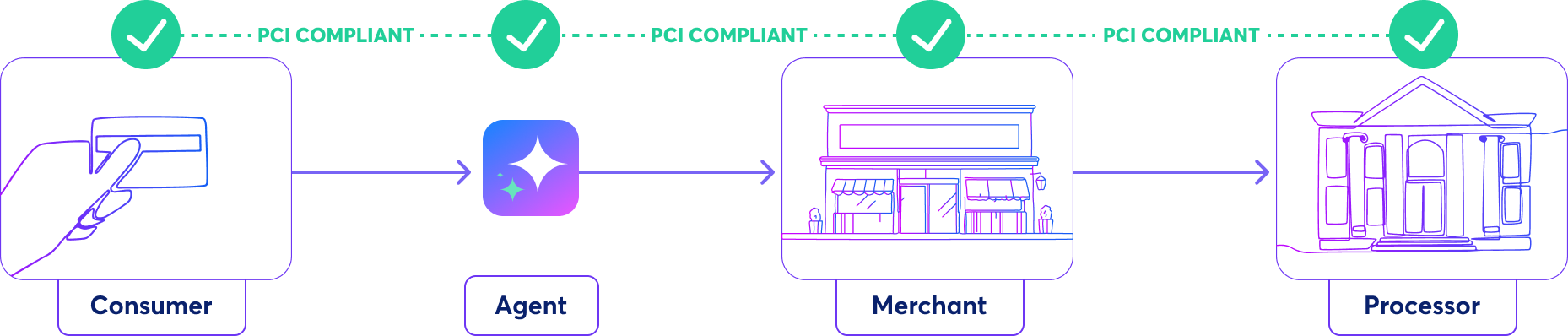

- AI agents need to collect card information, but that means they have to be PCI compliant (hint: unless they encrypt and tokenize the card data)

- An agent would need to pass the stored card information to the merchant

- A merchant needs a way to receive the data and pass the card information to its chosen PSP (Payment Service Provider)

- An AI agent needs a way to store the card information for subsequent purchases

One thing my years at a global card network (Visa) taught me is that payments are complicated, and there aren't many tools in the ecosystem that can connect all of those dots, especially in a fast-growing, emerging vertical like AI. Then it struck me: tokenization is more critical than ever. It can act as both a frictionless translation layer between parties and a secure, programmable vehicle for completing payments.

Consider the payment compliance problems for agents listed above: one of the biggest barriers to making payments work with agents is that every party that receives, sends, or stores raw card information must be PCI (Payment Card Industry) compliant. For those just learning about PCI compliance, the short overview is that it is a mandatory security standard for any business that stores, processes, or transmits cardholder data, and it requires deep security management for any system that even remotely touches sensitive payment card data (16-digit PAN, CVV, etc.). It's complex, it takes deep expertise to manage, it costs a lot to maintain, and it only gets harder as you scale. Tokenization (what VGS does, and where it leads globally) is first and foremost a powerful way of addressing PCI compliance: it removes that burden from our customers' shoulders and places it on us instead.

Just to prove a point, let's first imagine a world where AI platforms or merchants are fully PCI compliant and willing to take raw card data from a third-party agent. Not likely, but stay with me. Even then, we would have solved only half of the problem; the consumer's chosen agent also needs to be PCI compliant. Tokenization solves this: the agent first tokenizes the card information, stores the non-sensitive token for subsequent purchases, and later passes the token to a merchant or directly to the merchant's payment processor. In this case, the agent avoids the PCI burden, because all it stores or transmits is a non-sensitive surrogate value (a token) generated by a token provider (e.g., VGS).
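
The flow just described can be sketched in a few lines of Python. This is a minimal, in-memory illustration under stated assumptions, not VGS's API: `TokenVault`, `Agent`, `Card`, and the `tok_` prefix are hypothetical names, and a real token provider would capture the card through its own collection form so the raw number never passes through the agent at all. What it shows is the division of labor: only the vault ever holds the card number, while the agent persists and sends nothing but the token.

```python
import secrets
from dataclasses import dataclass


@dataclass(frozen=True)
class Card:
    pan: str  # primary account number (sensitive)
    exp_month: int
    exp_year: int


class TokenVault:
    """Hypothetical token provider: the only component that holds raw card data."""

    def __init__(self) -> None:
        self._store: dict[str, Card] = {}

    def tokenize(self, card: Card) -> str:
        # A random alias with no mathematical relationship to the PAN,
        # so it cannot be reversed without access to the vault.
        token = "tok_" + secrets.token_hex(12)
        self._store[token] = card
        return token

    def detokenize(self, token: str) -> Card:
        # Runs only inside the provider's PCI-compliant environment,
        # e.g. when forwarding a charge to the merchant's processor.
        return self._store[token]


class Agent:
    """Hypothetical AI agent that keeps only tokens, never the PAN."""

    def __init__(self, vault: TokenVault) -> None:
        self._vault = vault
        self.cards_on_file: list[str] = []

    def save_card(self, card: Card) -> str:
        # Simplification: in practice the provider's collection form would
        # tokenize the card before the agent ever sees it.
        token = self._vault.tokenize(card)
        self.cards_on_file.append(token)  # non-sensitive, safe to persist
        return token

    def checkout_payload(self, amount_cents: int, currency: str) -> dict:
        # What the agent sends to a merchant or PSP: a token, not card data.
        return {"amount": amount_cents, "currency": currency, "card": self.cards_on_file[-1]}
```

On a repeat purchase the agent simply reuses the token in `cards_on_file`; the card number stays with the vault.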

Generating tokens that can be programmed and sent to any permissioned API endpoint is something very few companies in the ecosystem can do today. VGS tokens, however, were built by design to be transmitted to third parties. Let's break down how VGS fits into the payment ecosystem of AI platforms.

Tokenization for AI and agentic payments

Most tokens on the market are built only to be stored and encrypted at rest. VGS is a PCI Level 1 compliant infrastructure platform that uses patented technology to transmit payment data to any third party (via VGS Proxies). We move payments data more than 40 billion times a year, making VGS by far the leading neutral token service provider. VGS can do this across any platform's or merchant's existing integrations with payment providers, with no additional integrations or coding needed. VGS has sent tokens to over 1,000 unique endpoints in the past 90 days alone. No other platform in the world is built to securely transmit data with this much reach, control, and flexibility at scale.

Sensitive data protection

More sensitive data now needs to be passed across more parties. AI platforms and merchants need a universal method of protecting data that is brought into their environments and the control to set or delete data as needed.

VGS tokens are programmable and domain-controlled by nature, meaning platforms can assign a token limited to a specific processor or merchant or to a specific shopping use case. Tokenization is the best programmable mechanism for trust and security.
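
Domain control can be modeled as a token that carries a list of permitted destinations. The sketch below is hypothetical (`ScopedVault`, `reveal_for`, and the `.example` hostnames are invented for illustration and are not VGS's interface); it assumes the token provider checks the destination before revealing the card number, and it also includes the ability to delete data that platforms need.

```python
import secrets
from dataclasses import dataclass


@dataclass(frozen=True)
class ScopedRecord:
    pan: str
    allowed_destinations: frozenset[str]  # e.g. a single processor's API host


class ScopedVault:
    """Hypothetical vault whose tokens are revealed only to permitted destinations."""

    def __init__(self) -> None:
        self._records: dict[str, ScopedRecord] = {}

    def tokenize(self, pan: str, allowed_destinations: set[str]) -> str:
        token = "tok_" + secrets.token_hex(12)
        self._records[token] = ScopedRecord(pan, frozenset(allowed_destinations))
        return token

    def reveal_for(self, token: str, destination: str) -> str:
        # Raises KeyError for unknown or deleted tokens.
        record = self._records[token]
        if destination not in record.allowed_destinations:
            raise PermissionError(f"token not permitted for {destination}")
        return record.pan

    def delete(self, token: str) -> None:
        # The platform keeps control: once deleted, the token resolves to nothing.
        self._records.pop(token, None)
```

A token minted for `api.processor-a.example` is useless if it leaks to any other endpoint, which is what makes the scope itself a security control.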

Tokens can and will be used for agentic commerce, network tokens in particular.

Network tokens are uniquely generated by card networks (like Visa, Mastercard, American Express, and Discover) for a specific card and a specific merchant.
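
As a toy model of that binding, the sketch below issues one token per (card, merchant) pair. `MockNetworkTokenService` and the merchant IDs are hypothetical; real network tokens are provisioned through each network's own token service, are issued to a token requestor, and carry per-transaction cryptograms, none of which is modeled here. The only property illustrated is that the same card yields a different token at each merchant, and a token presented by the wrong merchant is declined.

```python
import secrets


class MockNetworkTokenService:
    """Toy model of a card network's token service, not a real network API.

    It captures a single property: a network token is bound to one card and
    one merchant, so the same card yields different tokens at different merchants.
    """

    def __init__(self) -> None:
        self._by_pair: dict[tuple[str, str], str] = {}
        self._by_token: dict[str, tuple[str, str]] = {}

    def _new_token(self) -> str:
        # Real network tokens look like card numbers; a random 16-digit
        # string stands in for one here. Loop until the value is unused.
        while True:
            token = "".join(secrets.choice("0123456789") for _ in range(16))
            if token not in self._by_token:
                return token

    def provision(self, pan: str, merchant_id: str) -> str:
        key = (pan, merchant_id)
        if key not in self._by_pair:  # provision once per (card, merchant)
            token = self._new_token()
            self._by_pair[key] = token
            self._by_token[token] = key
        return self._by_pair[key]

    def authorize(self, token: str, merchant_id: str) -> bool:
        # Decline unknown tokens and tokens presented by any other merchant.
        pair = self._by_token.get(token)
        return pair is not None and pair[1] == merchant_id
```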

With connections and certifications to all four global card networks, VGS takes tokenizing and protecting sensitive data seriously.

Additionally, VGS is the first to enable payment tokenization for agentic commerce with platform partners like PayOS and Nekuda.

Processor Independence

If AI agents want to make payments at any merchant, they must first use a platform that can make payments through any processor. Every existing merchant has its own payment processes. Using VGS means data can pass through without the agent building a new integration and without the merchant changing its payment process.
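
One way to picture processor independence is a forwarding proxy that leaves the merchant's existing request format alone and only swaps token aliases for real values on the way out. The Python below is a hedged sketch of that idea: `reveal_aliases`, `forward`, the in-memory `VAULT`, and the processor URLs are all hypothetical, and a real proxy would operate on HTTPS traffic according to configured routes rather than returning a dictionary.

```python
from typing import Any

# Hypothetical vault contents: alias -> raw value (lives only inside the proxy).
VAULT = {"tok_card_123": "4111111111111111"}


def reveal_aliases(payload: Any, vault: dict[str, str]) -> Any:
    """Recursively swap any token alias in a JSON-like payload for its raw value."""
    if isinstance(payload, dict):
        return {key: reveal_aliases(value, vault) for key, value in payload.items()}
    if isinstance(payload, list):
        return [reveal_aliases(item, vault) for item in payload]
    if isinstance(payload, str) and payload in vault:
        return vault[payload]
    return payload  # numbers, unknown strings, etc. pass through unchanged


def forward(destination: str, payload: dict, vault: dict[str, str]) -> tuple[str, dict]:
    # The agent builds the same tokenized request no matter which processor
    # the merchant uses; only the proxy sees the revealed card number, right
    # before the request leaves for `destination`. A real proxy would send an
    # HTTPS request here; this sketch just returns what would be sent.
    return destination, reveal_aliases(payload, vault)
```

Because the substitution does not care about field names, the same token works whether the merchant's processor expects `source.number` or a nested `payment_method.card.pan` field.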

To put it plainly, payment provider independence is foundational for AI payments and agentic commerce. With VGS, AI platforms growing rapidly to enterprise scale can also run their own orchestration and routing, and can rest assured that they achieve processor independence with VGS as a partner.

Lifecycle management

If agents are going to process the very first payment and store the card on file for subsequent purchases, card lifecycle management is incredibly important. After all, estimates are that over 4 million credit cards expire yearly. That's a lot of credit cards to update.

VGS connects directly to the networks to keep payment credentials up to date with Network Tokens and Card Account Updater. Both automatically update cards in real time, limiting cart abandonment caused by outdated cards saved on file with the agent. Unlike payment processors, VGS provides these services independently of any single processor. In fact, when these services are used correctly, VGS customers have seen up to an additional 6% boost in approval rates and conversion across any payment processor.
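
The key property of lifecycle management is that the credential can change while the token the agent saved does not. This minimal sketch assumes an updater record of the form (old number, new number, new expiry); `LifecycleVault`, `apply_update`, and that record shape are hypothetical and do not reflect any network's Account Updater file format. Network token updates, which happen on the network side, are not modeled.

```python
from dataclasses import dataclass, replace


@dataclass(frozen=True)
class StoredCard:
    pan: str
    exp_month: int
    exp_year: int


class LifecycleVault:
    """Hypothetical vault where card updates happen behind a stable token."""

    def __init__(self) -> None:
        self._cards: dict[str, StoredCard] = {}

    def put(self, token: str, card: StoredCard) -> None:
        self._cards[token] = card

    def get(self, token: str) -> StoredCard:
        return self._cards[token]

    def apply_update(self, old_pan: str, new_pan: str, exp_month: int, exp_year: int) -> int:
        """Apply one updater record (e.g. a reissued or renewed card).

        Returns how many stored tokens were refreshed. The tokens themselves
        never change, so agents holding them need to do nothing.
        """
        updated = 0
        for token, card in list(self._cards.items()):
            if card.pan == old_pan:
                self._cards[token] = replace(card, pan=new_pan, exp_month=exp_month, exp_year=exp_year)
                updated += 1
        return updated


def is_expired(card: StoredCard, year: int, month: int) -> bool:
    # A card stays valid through the end of its expiry month.
    return (card.exp_year, card.exp_month) < (year, month)
```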

The last mile? That takes PCI compliance

PCI data is being transmitted faster than ever. AI platforms and agents are not PCI compliant by nature, but they don't need to be. If a token is passed instead of the underlying payment card data, every party in the ecosystem is less exposed to compliance and security risk. That means sensitive data NEVER touches any AI platform's systems, so they remain PCI compliant, and hackers and fraudsters never get access to the underlying payment data, keeping consumers' payment information safe and secure.

Enabling payments in agentic commerce

VGS is at the forefront of enabling AI commerce platforms for a reason: we believe they can power the future of e-commerce.
